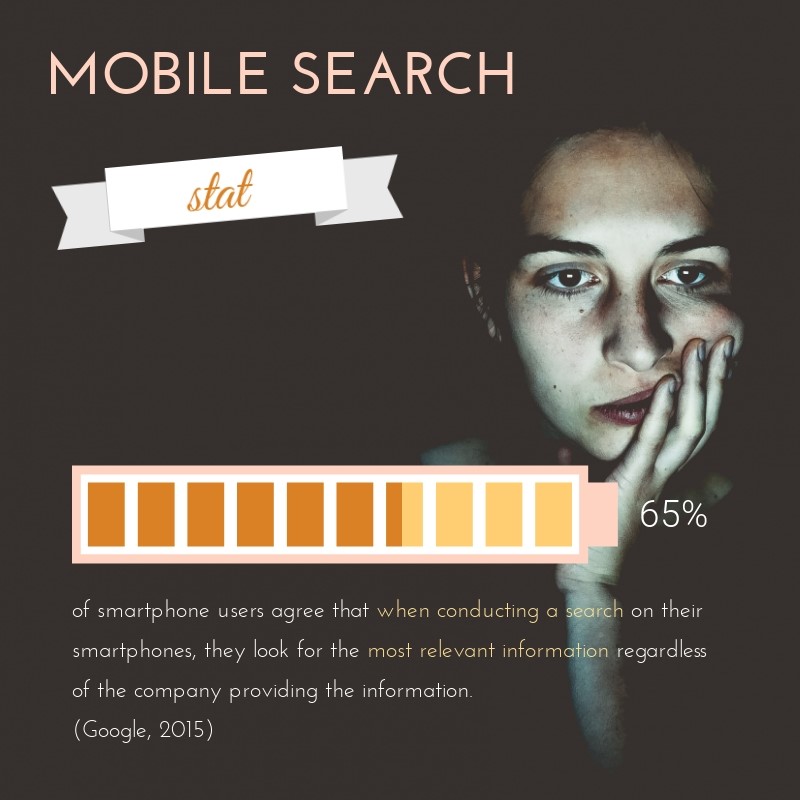

When patients search on their smartphones, they rarely pay attention to the source of the information as long as it is relevant to their immediate need. Doctors looking for answers to help a patient improve their vitality can sometimes fall into the same trap. In training, doctors learn how to recognize credible results. A physician in training is encouraged to rely on unbiased sources such as peer-reviewed journals, and medical students study research methods and design. Yet what if even the most “reliable” sources of medical research data currently available are all “unreliable”?

In a recent interview, George Hripcsak, MD, chair of biomedical informatics at Columbia University Vagelos College of Physicians and Surgeons, stated that observational studies, a research design with a track record of poor reproducibility, together with publication bias by both authors and editors, contribute to the divergent findings in the medical literature.

He is not the only scientist concerned that medical treatments are being based on unreliable data. Cathy O’Neil, PhD, author of Weapons of Math Destruction and founder of O’Neil Risk Consulting & Algorithmic Auditing, has been highlighting these issues on her blog since 2012. Check out this post on observational studies.

Dr. Hripcsak and his colleagues propose a complete shift: away from the current practice of carrying out one unique medical research project at a time and only sometimes publishing the results, and toward a high-throughput approach with consistent methodology. They have just used this approach to evaluate all depression treatments for a set of outcomes.

They simultaneously evaluated:

- 17 depression treatments

- 272 pairwise treatment comparisons

- 22 outcomes

- 5984 research hypotheses (including 53 truly negative control hypotheses)

- 59,038 covariates
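The counts in this list fit together arithmetically. As a rough check, and assuming the 272 pairs are ordered pairs of the 17 treatments (each treatment compared against each of the other 16), the short Python sketch below reproduces both the 272 pairs and the 5984 hypotheses. The treatment names are placeholders, not the drugs studied in the paper.

```python
from itertools import permutations

# Counts taken from the list above.
N_TREATMENTS = 17
N_OUTCOMES = 22

# Placeholder names; the actual treatments are listed in the paper.
treatments = [f"treatment_{i}" for i in range(1, N_TREATMENTS + 1)]

# Assumption: each comparison is an ordered pair of two different treatments.
ordered_pairs = list(permutations(treatments, 2))
n_pairs = len(ordered_pairs)          # 17 * 16

# One research hypothesis per (pair, outcome) combination.
n_hypotheses = n_pairs * N_OUTCOMES   # 272 * 22

print(n_pairs, n_hypotheses)
```

Under that assumption the script prints 272 and 5984, matching the figures reported above.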

The patient information was drawn from four different databases. A computer with 32 processing cores and 168 GB of memory took 5 weeks to produce the results. After calibration, the proportion of estimates showing no between-database heterogeneity was 25% higher than for the uncalibrated estimates. The result was an unbiased dissemination of evidence that was more complete and more reliable than what is available in the published literature.

What does this mean for practicing physicians and patients? This study is what my friends call a disruptasaurus. A major disruption is on the horizon in scientific and clinical medical research, and it may feel uncomfortable to those doctors and medical scientists who are not already agents of change.

If you would like to get another perspective on this topic, listen to Episode 49 of The Doctor’s Mentor Show. If you are ready to jump headfirst into medical research change, then read the paper below and accept the challenge that Schuemie et al. have laid at our feet.

Improving reproducibility by using high-throughput observational studies with empirical calibration

Martijn J. Schuemie, Patrick B. Ryan, George Hripcsak, David Madigan, Marc A. Suchard

Phil. Trans. R. Soc. A 2018 376 20170356; DOI: 10.1098/rsta.2017.0356. Published 6 August 2018